Beyond the Hype: What Enterprise AI Platforms Must Deliver for Predictable Autonomy

Autonomous AI agents are moving rapidly from experiments to enterprise pilots. But autonomy alone isn’t enough — business leaders need predictable, safe, and controllable autonomy before they can trust agents in production.

ENTERPRISE AI

Anand Saranath

11/9/2025 · 2 min read

Introduction


Two things caught my attention recently:

Broadcom/VMware's State of AI Transformation report shows that most enterprises are either buying or building AI platforms to operationalize AI. It highlights that modernization of legacy systems, improved developer experience, observability, and cost and compliance controls are now foundational to AI success.

An insightful article on The New Stack, "5 Factors for Predictable Autonomy with Agentic AI", which explains how to make AI agents trustworthy.

Taken together, they send a clear message: enterprise AI isn't just about models. It's about platforms and architecture that make autonomy reliable.

The 5 Factors for Predictable Autonomy (from The New Stack)

Bounded & Well-Defined Contexts – keep agents focused on clearly scoped tasks to reduce drift.

Controlled Tooling & Interfaces – strictly govern which APIs/tools agents can access.

Structured Prompts & Guardrails – use templates, constraints, and policy-driven instructions instead of free-form prompts.

Right-Sized Models & Hybrid Strategy – match model capability to the task; balance public, hyperscaler, and private models.

Monitoring, Feedback & Governance – embed observability and oversight so agents aren’t black boxes.

These are useful guardrails — but they’re only practical if the underlying platform supports them.

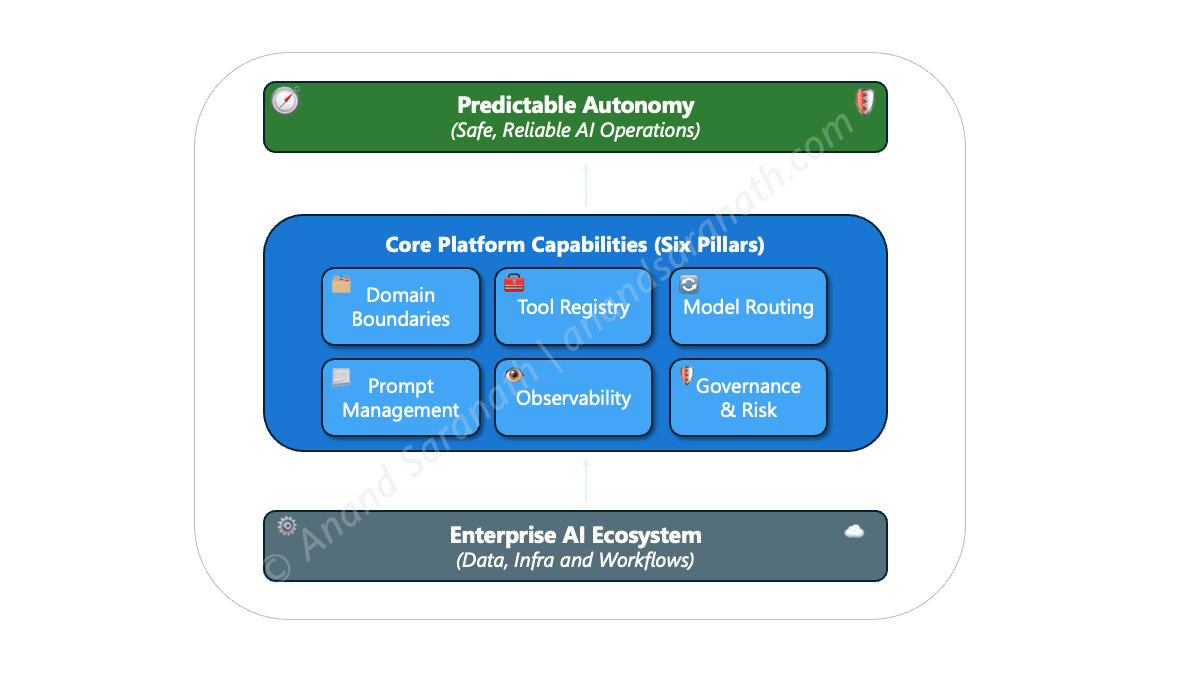

What a Robust Enterprise AI Platform Should Provide

Enterprises adopting agentic AI at scale need more than experimentation environments. A production-ready AI platform (whether built or bought) should enable at least these capabilities:

1. Domain Boundaries & Policy Enforcement

Ability to define agent scopes (business capabilities or workflows).

Enforce data access rules and API limits per domain.
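To make this first capability concrete, here is a minimal Python sketch, assuming a platform that attaches a declarative policy to each agent session. `DomainPolicy`, `ScopedAgentSession`, and `PolicyViolation` are hypothetical names, not any product's API, and the methods only perform the checks; the actual data fetch and API call are left as comments.

```python
from __future__ import annotations

from dataclasses import dataclass


class PolicyViolation(Exception):
    """Raised when an agent steps outside its declared domain."""


@dataclass(frozen=True)
class DomainPolicy:
    """Hypothetical per-domain policy: the data and APIs one business capability may use."""
    domain: str
    allowed_datasets: frozenset[str]
    allowed_apis: frozenset[str]
    max_api_calls: int  # per-session call budget (an illustrative API limit)


@dataclass
class ScopedAgentSession:
    """One agent run, checked against its domain policy on every access."""
    agent_id: str
    policy: DomainPolicy
    api_calls: int = 0

    def read_dataset(self, dataset: str) -> None:
        if dataset not in self.policy.allowed_datasets:
            raise PolicyViolation(
                f"{self.agent_id} may not read '{dataset}' in domain '{self.policy.domain}'"
            )
        # ... fetch the data here (omitted in this sketch) ...

    def call_api(self, api: str) -> None:
        if api not in self.policy.allowed_apis:
            raise PolicyViolation(f"{self.agent_id} may not call '{api}'")
        if self.api_calls >= self.policy.max_api_calls:
            raise PolicyViolation(
                f"{self.agent_id} exceeded {self.policy.max_api_calls} API calls"
            )
        self.api_calls += 1
        # ... perform the call here (omitted in this sketch) ...
```

For example, a `refund-agent` bound to a `billing` policy that allows only the `invoices` dataset would raise `PolicyViolation` on any attempt to read HR records, and again once it exhausts its call budget.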

2. Tool Registry & Safe Integration Layer

A catalog of approved tools/APIs an agent can invoke.

Version control, access approval workflows, and usage logging.
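A tool registry can be sketched in a similar spirit. This is an illustrative in-memory version, assuming tools are plain Python callables keyed by name and version; `ToolRegistry`, `ToolEntry`, and the `usage_log` list are hypothetical stand-ins for a real catalog, approval system, and log pipeline.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolEntry:
    name: str
    version: str
    func: Callable[..., Any]
    approved: bool = False  # set by an approval workflow, never by the agent itself


class ToolRegistry:
    """Catalog of tools keyed by (name, version); only approved entries are callable."""

    def __init__(self) -> None:
        self._tools: dict[tuple[str, str], ToolEntry] = {}
        self.usage_log: list[tuple[str, str, str]] = []  # (agent_id, tool, version)

    def register(self, name: str, version: str, func: Callable[..., Any]) -> None:
        # Versions are registered side by side, so older ones stay pinned and auditable.
        self._tools[(name, version)] = ToolEntry(name, version, func)

    def approve(self, name: str, version: str) -> None:
        self._tools[(name, version)].approved = True

    def invoke(self, agent_id: str, name: str, version: str, *args: Any, **kwargs: Any) -> Any:
        entry = self._tools.get((name, version))
        if entry is None or not entry.approved:
            raise PermissionError(f"{name}@{version} is not an approved tool")
        # Log before executing so even failing calls leave a usage record.
        self.usage_log.append((agent_id, name, version))
        return entry.func(*args, **kwargs)
```

Registering a hypothetical `currency_convert` tool at version `1.0` and invoking it before `approve()` raises `PermissionError`; after approval the same call goes through and is recorded in `usage_log`. Keying by version lets a new tool version go through approval without disturbing agents pinned to the old one.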

3. Prompt Management & Guardrails

Maintain versioned, reusable prompt configurations tied to each agent’s domain and capabilities.

Support policy injection (safety, compliance, tone) during prompt assembly.

Perform runtime validation of prompts for security, compliance, and safety before model calls.

Provide audit history and rollback to track and revert prompt changes.
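The four prompt-management bullets can be combined into one small store. This sketch assumes prompts are `string.Template` strings, that policy text is simply prepended during assembly, and that runtime validation is a regex deny-list. `PromptStore`, `POLICY_SNIPPETS`, and `BLOCKED_PATTERNS` are hypothetical, and a production platform would likely use dedicated safety and compliance classifiers rather than regexes.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str
    author: str


# Hypothetical policy text injected into every prompt at assembly time.
POLICY_SNIPPETS = {
    "safety": "Never reveal credentials or personal data.",
    "tone": "Respond in a concise, professional tone.",
}

# Naive runtime validation: reject assembled prompts matching known-bad patterns.
BLOCKED_PATTERNS = [re.compile(r"ignore (all|previous) instructions", re.IGNORECASE)]


class PromptStore:
    """Versioned prompt configs per agent, with audit history and rollback."""

    def __init__(self) -> None:
        self._history: dict[str, list[PromptVersion]] = {}
        self._active: dict[str, int] = {}

    def publish(self, agent: str, template: str, author: str) -> int:
        versions = self._history.setdefault(agent, [])
        pv = PromptVersion(len(versions) + 1, template, author)
        versions.append(pv)  # append-only: old versions are never overwritten
        self._active[agent] = pv.version
        return pv.version

    def rollback(self, agent: str, version: int) -> None:
        if not any(v.version == version for v in self._history[agent]):
            raise KeyError(f"no version {version} for {agent}")
        self._active[agent] = version

    def history(self, agent: str) -> list[PromptVersion]:
        return list(self._history[agent])

    def assemble(self, agent: str, **values: str) -> str:
        active = self._history[agent][self._active[agent] - 1]
        body = Template(active.template).substitute(**values)
        prompt = "\n".join(POLICY_SNIPPETS.values()) + "\n\n" + body
        for pattern in BLOCKED_PATTERNS:
            if pattern.search(prompt):
                raise ValueError("prompt failed runtime validation")
        return prompt
```

Every `publish()` appends to the history instead of overwriting, so `rollback()` is just a pointer move and the audit trail (who changed what, in which version) stays intact.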

4. Model Orchestration & Routing

Support multiple LLMs (public, hyperscaler-hosted, private).

Route dynamically based on cost, compliance, latency, and capability.

Allow model versioning and fallback strategies.
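Routing and fallback might look roughly like this, assuming each model endpoint is described by its hosting type, price, p95 latency, and capability tags. The endpoint names, the figures you would plug in, and the `invoke` callback are all hypothetical. The sketch filters on capability, compliance (allowed hosting), and latency, then tries the cheapest eligible model first and falls back down the list on errors.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ModelEndpoint:
    name: str                  # hypothetical, e.g. "private-medium"
    hosting: str               # "public", "hyperscaler", or "private"
    cost_per_1k_tokens: float
    p95_latency_ms: int
    capabilities: frozenset[str]


@dataclass(frozen=True)
class RoutingRequest:
    capability: str
    max_latency_ms: int
    allowed_hosting: frozenset[str]  # compliance constraint, e.g. {"private"} for regulated data


def candidates(models: list[ModelEndpoint], req: RoutingRequest) -> list[ModelEndpoint]:
    """Keep models that satisfy capability, compliance, and latency; cheapest first."""
    eligible = [
        m for m in models
        if req.capability in m.capabilities
        and m.hosting in req.allowed_hosting
        and m.p95_latency_ms <= req.max_latency_ms
    ]
    return sorted(eligible, key=lambda m: m.cost_per_1k_tokens)


def call_with_fallback(
    models: list[ModelEndpoint],
    req: RoutingRequest,
    invoke: Callable[[ModelEndpoint, str], str],
    prompt: str,
) -> tuple[str, str]:
    """Try eligible models in order; return (model name, response) from the first success."""
    errors: list[str] = []
    for model in candidates(models, req):
        try:
            return model.name, invoke(model, prompt)
        except Exception as exc:  # e.g. timeouts, rate limits, provider errors
            errors.append(f"{model.name}: {exc}")
    raise RuntimeError("no eligible model succeeded: " + "; ".join(errors))
```

Encoding versions in endpoint names (for example a hypothetical `private-medium-v2`) is one simple way to support model versioning: the routing rules stay the same while a new version is registered alongside the old one.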

5. Observability & Feedback Loops

End-to-end tracing of agent actions (requests, decisions, outputs).

Integration with OpenTelemetry / monitoring stacks.

Capture human feedback & ratings for continuous tuning.

Audit logs for compliance and risk review.
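A real platform would more likely rely on an OpenTelemetry SDK for end-to-end tracing. To stay dependency-free, the sketch below only mimics the core idea with the standard library: nested spans that share a trace ID and record their parent, plus human ratings keyed by trace ID. `span`, `record_feedback`, `FINISHED`, and `FEEDBACK` are hypothetical names; a production setup would export spans to its monitoring stack rather than append them to a list.

```python
from __future__ import annotations

import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    name: str
    attributes: dict = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0


_current: ContextVar[Optional[Span]] = ContextVar("current_span", default=None)
FINISHED: list[Span] = []            # stand-in for an exporter and audit log
FEEDBACK: dict[str, list[int]] = {}  # trace_id -> human ratings


@contextmanager
def span(name: str, **attributes: object) -> Iterator[Span]:
    """Open a span; child spans inherit the trace ID and link to this span as parent."""
    parent = _current.get()
    s = Span(
        trace_id=parent.trace_id if parent else uuid.uuid4().hex,
        span_id=uuid.uuid4().hex[:16],
        parent_id=parent.span_id if parent else None,
        name=name,
        attributes=dict(attributes),
        start=time.time(),
    )
    token = _current.set(s)
    try:
        yield s
    finally:
        s.end = time.time()
        _current.reset(token)
        FINISHED.append(s)


def record_feedback(trace_id: str, rating: int) -> None:
    """Attach a human rating to the whole agent run identified by trace_id."""
    FEEDBACK.setdefault(trace_id, []).append(rating)
```

Wrapping an agent run in `with span("agent.run") as root:` and each model or tool call in a nested `span(...)` yields a tree that can be reconstructed per trace; `record_feedback(root.trace_id, 4)` then ties a reviewer's rating to exactly that run, and the finished spans double as an audit record.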

6. Governance & Risk Controls

Approval workflows for new tools, models, or prompt changes.

Integration with enterprise security & compliance policies.

Support self-service agent registration with metadata (domain, owner, tools, models).

Run automated policy checks (security, compliance, cost) at onboarding.

Provide central dashboards for approvals, lifecycle management, usage quotas and spend limits, and risk monitoring at scale.
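Self-service registration with automated onboarding checks could be sketched as follows. It assumes the approved tool and model lists and per-domain budget caps are available as simple lookups; `AgentRegistration`, `APPROVED_TOOLS`, `APPROVED_MODELS`, `DOMAIN_BUDGET_CAP_USD`, and the status values are hypothetical. Clean registrations still go to a human approver rather than being auto-approved.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AgentRegistration:
    name: str
    domain: str
    owner: str
    tools: list[str]
    models: list[str]
    monthly_budget_usd: float
    status: str = "draft"  # draft -> awaiting_approval -> approved, or rejected
    findings: list[str] = field(default_factory=list)


# Hypothetical enterprise settings; in practice sourced from security and finance systems.
APPROVED_TOOLS = {"payments.refund", "crm.lookup"}
APPROVED_MODELS = {"private-small", "private-medium"}
DOMAIN_BUDGET_CAP_USD = {"billing": 500.0, "support": 200.0}


def onboarding_checks(reg: AgentRegistration) -> list[str]:
    """Automated security, compliance, and cost checks run at registration time."""
    findings: list[str] = []
    if not reg.owner:
        findings.append("missing owner")
    findings += [f"unapproved tool: {t}" for t in reg.tools if t not in APPROVED_TOOLS]
    findings += [f"unapproved model: {m}" for m in reg.models if m not in APPROVED_MODELS]
    cap = DOMAIN_BUDGET_CAP_USD.get(reg.domain)
    if cap is None:
        findings.append(f"unknown domain: {reg.domain}")
    elif reg.monthly_budget_usd > cap:
        findings.append(f"budget {reg.monthly_budget_usd} exceeds {reg.domain} cap {cap}")
    return findings


def register_agent(reg: AgentRegistration) -> AgentRegistration:
    reg.findings = onboarding_checks(reg)
    # Failing registrations are rejected; clean ones wait for a human approver.
    reg.status = "rejected" if reg.findings else "awaiting_approval"
    return reg


def approve(reg: AgentRegistration) -> None:
    if reg.status != "awaiting_approval":
        raise ValueError(f"cannot approve agent in status '{reg.status}'")
    reg.status = "approved"
```

The `findings` list is what a central dashboard would surface to approvers, and the same budget figure could later back the usage quota or spend limit enforced for that agent.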

When these are built into the platform, the five factors move from theory to operational reality.

Why This Matters Now

The Broadcom/VMware report shows enterprises at a crossroads: they’re buying or building AI platforms to go from experimentation to real impact. Those platforms will define how safe and predictable agentic AI becomes.

This is no longer just an AI/ML conversation — it’s a system architecture challenge. Just as we applied DevOps, microservices, and observability to transform software delivery, we now need governed AI platforms to transform how agents operate in the enterprise.

Closing Thought

Autonomy can’t be left to chance. To scale agentic AI responsibly, enterprises must invest in platforms that enforce boundaries, tool safety, prompt discipline, hybrid model orchestration, and deep observability.

Architects, engineering leaders, and platform teams have a unique opportunity — and responsibility — to design these guardrails early.

👉 How is your organization approaching the move from AI experimentation to predictable autonomy? What’s the hardest platform capability to get right: tool governance, model routing, or observability?